Recently I started a refactoring exercise on some SharePoint code, and in this post I will lay out a very simple architecture that I have implemented for creating web part views. I want to start here because this is the beginning. In a future post I will show you how to refactor this code, introducing further patterns of encapsulation to show how the design can grow to accommodate new needs.

The cost of impedance mismatches

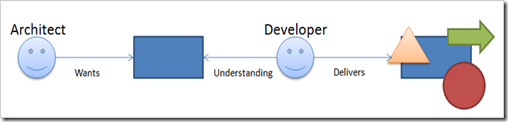

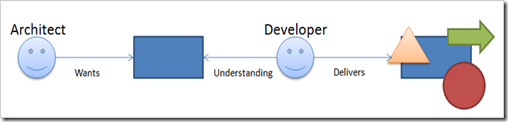

Recall that in the first article I presented an image to highlight how implementations can differ.

A major reason for this difference is that, in the absence of clear protocols and contracts, information is easily lost when expectations are communicated. When expectations and outcomes differ, the result can be changes that are hard to check for conformance and difficult to estimate in terms of cost. Additional costs can include excessive rework.

So the implementation that I will talk through in this article is primarily designed to introduce a degree of conformance and consistency into the development process. Whilst the code artifacts that result from this article may violate some architectural norms, what we are left with will form a good base to build upon, with enough abstraction that it can easily be refactored and improved over time without making the overall application unnecessarily unstable.

Separating out the database access code

The first thing that I’ll do is create a custom Command abstraction to encapsulate the execution of my data access code. A Command fetches data from a repository somewhere and returns domain objects to work with further up the architectural stack.

In my application there is a base Command abstraction that forms the base of more repository-specific classes of Commands. Each of these repository-specific classes understands how to connect to and communicate with one type of repository, e.g. SQL repositories, SharePoint repositories, file systems, etc.

Those repository-specific Commands are in turn subclassed into domain-specific commands.

At the bottom of the command class hierarchy sits a simple abstract base Command class. It defines the contract: an Execute method that does the work, and a Result property that returns strongly typed results:

```csharp
public abstract class Command<T>
{
    protected T _result = default(T);

    public T Result { get { return this._result; } }

    public abstract void Execute();
}
```
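To make the Execute/Result contract concrete, here is a minimal sketch of a hypothetical FixedValueCommand (not part of the original code) that simply returns a value it was given. A command like this can also stand in for a real repository while a view is still being built.

```csharp
using System;

// Same contract as the base Command class above.
public abstract class Command<T>
{
    protected T _result = default(T);
    public T Result { get { return this._result; } }
    public abstract void Execute();
}

// Hypothetical command: returns a fixed value instead of querying a repository.
public class FixedValueCommand<T> : Command<T>
{
    private readonly T value;

    public FixedValueCommand(T value) { this.value = value; }

    public override void Execute()
    {
        // Execute is what populates Result; until it runs, Result holds default(T).
        this._result = this.value;
    }
}
```

Because Result holds default(T) until Execute has run, callers should always call Execute before reading Result.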

Sitting above the base Command class we have specific abstractions for the different types of repositories that we access. In the case of SQL repositories, I use constructor parameters to specify that every SQL command depends on receiving a connection string and command text:

```csharp
using System;

public abstract class SQLCommand<T> : Command<T>
{
    protected string connectionString = "";
    protected string commandText = "";

    protected SQLCommand(string connectionString, string commandText)
    {
        // Every SQL command needs both a connection string and command text.
        if (string.IsNullOrEmpty(connectionString)) { throw new ArgumentNullException("connectionString"); }
        if (string.IsNullOrEmpty(commandText)) { throw new ArgumentNullException("commandText"); }

        this.connectionString = connectionString;
        this.commandText = commandText;
    }

    // Execute is left abstract (inherited from Command<T>) so that every
    // concrete SQL command is forced to supply its own implementation.
}
```
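The same shape works for the other repository types mentioned earlier. As a sketch only, a hypothetical FileSystemCommand<T> base class for file system repositories could validate a path in its constructor, just as SQLCommand validates its connection string and command text. GetFirstLineCommand is an equally hypothetical domain-specific command built on it, and Command<T> is repeated so the example compiles on its own.

```csharp
using System;
using System.IO;

public abstract class Command<T>
{
    protected T _result = default(T);
    public T Result { get { return this._result; } }
    public abstract void Execute();
}

// Hypothetical repository-specific base class for file system repositories.
// Like SQLCommand, it validates its single dependency: a file path.
public abstract class FileSystemCommand<T> : Command<T>
{
    protected readonly string path;

    protected FileSystemCommand(string path)
    {
        if (string.IsNullOrEmpty(path)) { throw new ArgumentNullException("path"); }
        this.path = path;
    }
}

// Hypothetical domain-specific command: reads the first line of a text file.
public class GetFirstLineCommand : FileSystemCommand<string>
{
    public GetFirstLineCommand(string path) : base(path) { }

    public override void Execute()
    {
        using (var reader = new StreamReader(this.path))
        {
            // ReadLine returns null for an empty file.
            this._result = reader.ReadLine();
        }
    }
}
```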

And finally, at the top level we have specific commands. Here is an example of a Command which encapsulates the logic for getting information about the daily weather from a SQL repository.

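```csharp
using System;
using System.Data;
using System.Data.SqlClient;

public class GetWeatherCommand : SQLCommand<WeatherInformation>
{
    const string _commandText = "spGetDailyWeather";

    public GetWeatherCommand(string connectionString)
        : base(connectionString, _commandText) { }

    public override void Execute()
    {
        WeatherInformation weather = null;

        // Both the connection and the command are disposed when we are done.
        using (var cnn = new SqlConnection(connectionString))
        using (var cmd = new SqlCommand(commandText, cnn))
        {
            // spGetDailyWeather is a stored procedure, so say so explicitly.
            cmd.CommandType = CommandType.StoredProcedure;
            cnn.Open();

            using (var reader = cmd.ExecuteReader())
            {
                if (reader.Read())
                {
                    // The first column is expected to hold the temperature.
                    weather = new WeatherInformation
                    {
                        Temperature = int.Parse(reader[0].ToString())
                    };
                }
            }
        }

        this._result = weather;
    }
}
```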

Over time we can identify ways to refactor our commands by pulling apart specific pieces of logic and pushing them further down our Command stack. For example, the job of creating connection objects and managing their lifetime might be pushed into the base SQL command, or we might further abstract the data reading and handling process so that messy parsing logic is not duplicated at this level.
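As a sketch of the first idea, the template method pattern lets a base class own the reader's lifetime and the read step, so that concrete commands only say where the data comes from and how to map a row. ReaderCommand<T>, OpenReader and Map are hypothetical names, and an in-memory DataTable stands in for SQL Server so the example runs without a database. In a SQL-backed subclass, OpenReader might open a SqlConnection and return cmd.ExecuteReader(CommandBehavior.CloseConnection), so that disposing the reader also closes the connection.

```csharp
using System;
using System.Data;

public abstract class Command<T>
{
    protected T _result = default(T);
    public T Result { get { return this._result; } }
    public abstract void Execute();
}

// Hypothetical refactoring: the base class owns the reader's lifetime;
// subclasses only supply the reader and the row-to-entity mapping.
public abstract class ReaderCommand<T> : Command<T>
{
    // Where the rows come from (a SqlCommand in production).
    protected abstract IDataReader OpenReader();

    // How the current row becomes a domain entity.
    protected abstract T Map(IDataRecord record);

    public sealed override void Execute()
    {
        using (IDataReader reader = OpenReader())
        {
            // Only the first row is mapped; no rows means default(T).
            this._result = reader.Read() ? Map(reader) : default(T);
        }
    }
}

public class WeatherInformation
{
    public int Temperature { get; set; }
}

// Hypothetical command backed by an in-memory DataTable.
public class InMemoryWeatherCommand : ReaderCommand<WeatherInformation>
{
    private readonly DataTable table;

    public InMemoryWeatherCommand(DataTable table) { this.table = table; }

    protected override IDataReader OpenReader() { return this.table.CreateDataReader(); }

    protected override WeatherInformation Map(IDataRecord record)
    {
        return new WeatherInformation { Temperature = Convert.ToInt32(record[0]) };
    }
}
```

Sealing Execute keeps the lifetime handling in one place; subclasses cannot accidentally bypass it.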

Commands return custom entities

Notice that the Command pattern allows custom entities to be returned. This not only enforces strong typing throughout our solution but also properly abstracts and encapsulates our entities, protecting our application from changes that we might make, such as changes to the underlying behavior or version changes like new properties that our entities might take on.
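The WeatherInformation entity itself is not shown in this article. Assuming it is a plain class with an int Temperature property (which is what GetWeatherCommand populates), this sketch illustrates the kind of change the entity shields us from: a hypothetical Humidity property added in a later version leaves an existing consumer, the equally hypothetical WeatherFormatter, untouched.

```csharp
using System;

// Assumed shape of the WeatherInformation entity.
public class WeatherInformation
{
    public int Temperature { get; set; }

    // Hypothetical property added in a later version. Code that only
    // reads Temperature keeps compiling and behaving exactly as before.
    public int? Humidity { get; set; }
}

// Hypothetical consumer written against the first version of the entity.
public static class WeatherFormatter
{
    public static string Describe(WeatherInformation weather)
    {
        if (weather == null) { throw new ArgumentNullException("weather"); }
        return weather.Temperature + " degrees";
    }
}
```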

Modelling view logic

View modelling allows us to simplify UI logic, and by separating responsibilities it also helps to keep our HTML "clean". In our example, there may be other information that we can infer from the temperature and that would be useful to display to our users. For instance, we might want to display a different CSS color depending on which range the temperature falls within. We can model that logic in a view model class that we later bind directly to a UI layout template:

```csharp
public class DailyWeatherViewModel
{
    private WeatherInformation weather = null;

    public DailyWeatherViewModel(WeatherInformation weather)
    {
        this.weather = weather;
    }

    public int Temperature
    {
        get { return this.weather.Temperature; }
    }

    public TemperatureRange TemperatureRange
    {
        get
        {
            if (this.Temperature < 0)
                return TemperatureRange.VeryCold;
            else if (this.Temperature < 15)
                return TemperatureRange.Cool;
            else if (this.Temperature < 25)
                return TemperatureRange.Mild;
            else if (this.Temperature < 35)
                return TemperatureRange.Hot;
            else
                return TemperatureRange.VeryHot;
        }
    }
}

public enum TemperatureRange : short
{
    VeryHot,
    Hot,
    Mild,
    Cool,
    VeryCold,
}
```
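For the CSS color example, the view model can expose the class name directly so the layout template holds no conditional logic. This sketch repeats the view model and its thresholds and adds a hypothetical CssClass property; the weather-* class names are assumptions, as is the WeatherInformation shape, which is inferred from how GetWeatherCommand populates it.

```csharp
using System;

public class WeatherInformation { public int Temperature { get; set; } }

public enum TemperatureRange : short { VeryHot, Hot, Mild, Cool, VeryCold }

public class DailyWeatherViewModel
{
    private readonly WeatherInformation weather;

    public DailyWeatherViewModel(WeatherInformation weather)
    {
        // Fail fast rather than with a NullReferenceException at render time.
        if (weather == null) { throw new ArgumentNullException("weather"); }
        this.weather = weather;
    }

    public int Temperature { get { return this.weather.Temperature; } }

    // Same thresholds as the view model above.
    public TemperatureRange TemperatureRange
    {
        get
        {
            if (Temperature < 0) return TemperatureRange.VeryCold;
            if (Temperature < 15) return TemperatureRange.Cool;
            if (Temperature < 25) return TemperatureRange.Mild;
            if (Temperature < 35) return TemperatureRange.Hot;
            return TemperatureRange.VeryHot;
        }
    }

    // Hypothetical addition: e.g. VeryCold becomes "weather-verycold".
    public string CssClass
    {
        get { return "weather-" + this.TemperatureRange.ToString().ToLowerInvariant(); }
    }
}
```

The template could then write `<div class="<%= this.Model.CssClass %>">`, and the designer only has to style five class names.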

Looking at the Core project now, we can see the code that has been required so far. We have our SQL Command class, which returns an entity, and a separate abstraction for our view logic.

Separating view layout from code


Using an HTML template for the layout view brings benefits such as allowing designers to work on the user interface, as well as a good separation of concerns between layout and application logic.

In the case of this Weather component, I might initially choose the simple layout shown in the following snippet; it is still a simple exercise to swap it out when the designer comes back with a new layout template later.

```html
<div id="BirthdayText">
    The temperature is: <%= this.Model.TemperatureRange %>
    <img src="/_layouts/<%= this.Model.TemperatureRange.ToString() %>"
         alt="<%= this.Model.TemperatureRange.ToString() %>" />
</div>
```

The code behind for this HTML template might look something like this standard piece of ASP.NET codebehind:

```csharp
using System.Web.UI;

public partial class DailyWeatherControl : UserControl
{
    DailyWeatherViewModel model;

    public DailyWeatherControl() { }

    // Set by the web part before rendering; read by the .ascx markup.
    public DailyWeatherViewModel Model
    {
        get { return this.model; }
        set { this.model = value; }
    }
}
```

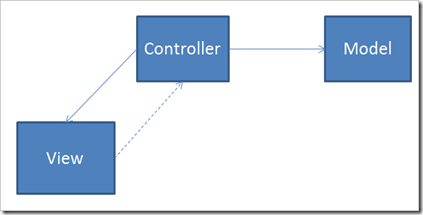

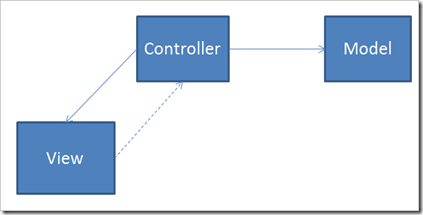

Web part as the coordinator

Back in part 1, I mentioned that I expect Web Part code to be no more than about 120 lines. The exact count will depend somewhat on how much event handling your web part has to do, but you can use 120 lines as a baseline for a simple Web Part that renders data and handles little or no user input.

In such a case the Web Part is responsible for orchestration and coordination of the flow of control. The Web Part will create instances of commands and view models; it will instantiate layout templates and pass the view models to them. Very simple indeed.

Here is our Web Part class:

```csharp
using System;
using System.Configuration;
using System.Web.UI.WebControls.WebParts;

public class DailyWeatherWebPart : WebPart
{
    protected override void OnLoad(EventArgs e)
    {
        base.OnLoad(e);
        this.EnsureChildControls();
    }

    protected override void CreateChildControls()
    {
        base.CreateChildControls();

        // 1. Fetch the data through a Command.
        var connectionString = ConfigurationManager.ConnectionStrings["AppSqlConn"].ConnectionString;
        var weatherCommand = new GetWeatherCommand(connectionString);
        weatherCommand.Execute();

        // 2. Load the layout template and add it to the control tree.
        DailyWeatherControl weatherControl =
            (DailyWeatherControl)Page.LoadControl("~/_controltemplates/Neimke/WebParts/DailyWeatherControl.ascx");
        this.Controls.Add(weatherControl);

        // 3. Hand the template a view model built from the entity.
        weatherControl.Model = new DailyWeatherViewModel(weatherCommand.Result);
    }
}
```

From here we might eventually decide to abstract further the complexities of template instantiation and error handling to another base class.
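As a sketch of what such a base class might look like, the hypothetical ViewCoordinator<TEntity, TModel> below captures the coordination steps in a template method and catches failures, so that one broken data source renders a fallback message rather than breaking the page. Rendering is reduced to returning a string so the example runs outside ASP.NET; in a real web part, Render would load the .ascx and assign its Model, as DailyWeatherWebPart does. All type names here are illustrative.

```csharp
using System;

public abstract class Command<T>
{
    protected T _result = default(T);
    public T Result { get { return this._result; } }
    public abstract void Execute();
}

// Hypothetical base class: command -> view model -> view, with error handling.
public abstract class ViewCoordinator<TEntity, TModel>
{
    protected abstract Command<TEntity> CreateCommand();
    protected abstract TModel CreateViewModel(TEntity entity);
    protected abstract string Render(TModel model);

    // Fallback output; a real implementation would probably also log ex here.
    protected virtual string RenderError(Exception ex)
    {
        return "This content is currently unavailable.";
    }

    public string Run()
    {
        try
        {
            Command<TEntity> command = CreateCommand();
            command.Execute();
            return Render(CreateViewModel(command.Result));
        }
        catch (Exception ex)
        {
            return RenderError(ex);
        }
    }
}

// Hypothetical commands used to exercise the coordinator.
public class ConstantCommand : Command<int>
{
    private readonly int value;
    public ConstantCommand(int value) { this.value = value; }
    public override void Execute() { this._result = this.value; }
}

public class FailingCommand : Command<int>
{
    public override void Execute() { throw new InvalidOperationException("repository down"); }
}

// Hypothetical concrete coordinator whose "view model" is simply a string.
public class TemperatureCoordinator : ViewCoordinator<int, string>
{
    private readonly Command<int> command;
    public TemperatureCoordinator(Command<int> command) { this.command = command; }
    protected override Command<int> CreateCommand() { return this.command; }
    protected override string CreateViewModel(int temperature) { return temperature + " degrees"; }
    protected override string Render(string model) { return "<div>" + model + "</div>"; }
}
```

With this in place, each new web part only supplies the three factory steps, which keeps it well within the 120-line baseline.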

What have we achieved?

I mentioned at the start of this article that the architecture we created would give us a good base from which to establish norms and standards, and that what we create should lend itself to improvement through further refactoring. You will also recall that I was looking for a way to have developers produce expected outcomes.

Given the architecture that I have presented, when I ask for a web part to be developed, I already know what the delivery should look like:

- A Command

- An Entity

- A ViewModel

- An HTML Layout Template

- A Web Part class

This makes the job of passing or failing code based on conformance much simpler, and having such disciplines at play within our development team should ultimately lead to higher-quality outcomes.

The final layout of our solution looks like this:

Where to from here?…


In the next article I want to take the code refactoring deeper to look at how we might introduce new patterns that will allow us to increase the testability of our code.